What is Langfuse?

Langfuse is an open-source LLM engineering platform that helps teams trace API calls, monitor performance, and debug issues in their AI applications.

Tracing LangChain

Langfuse Tracing integrates with LangChain through LangChain Callbacks (Python, JS). The Langfuse SDK then automatically creates a nested trace for every run of your LangChain application, so you can log, analyze, and debug it. You can configure the integration via (1) constructor arguments or (2) environment variables. Get your Langfuse credentials by signing up at cloud.langfuse.com or by self-hosting Langfuse.

Constructor arguments
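A minimal sketch of option (1), assuming the v2 Langfuse Python SDK, where the LangChain handler is imported from `langfuse.callback` (newer SDK versions expose it as `langfuse.langchain.CallbackHandler` with a different setup). The key strings are placeholders, and `make_langfuse_handler` is a hypothetical helper name.

```python
def make_langfuse_handler(public_key: str, secret_key: str,
                          host: str = "https://cloud.langfuse.com"):
    """Create a Langfuse callback handler for LangChain from explicit credentials."""
    # v2 SDK import path (assumption); imported here so the helper can be
    # defined even before the SDK is installed.
    from langfuse.callback import CallbackHandler

    return CallbackHandler(
        public_key=public_key,  # "pk-lf-..." from the project settings
        secret_key=secret_key,  # "sk-lf-..." from the project settings
        host=host,              # Langfuse Cloud, or your self-hosted URL
    )


# Usage with placeholder keys:
# langfuse_handler = make_langfuse_handler("pk-lf-...", "sk-lf-...")
```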

Environment variables
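A minimal sketch of option (2). In practice these variables are usually exported in the shell or a `.env` file; setting them from Python as below is only for illustration. The values are placeholders, and `LANGFUSE_ENV` and `configure_langfuse_env` are hypothetical names. The commented import path assumes the v2 SDK.

```python
import os

# Placeholder values; copy the real keys from your Langfuse project settings.
LANGFUSE_ENV = {
    "LANGFUSE_PUBLIC_KEY": "pk-lf-...",
    "LANGFUSE_SECRET_KEY": "sk-lf-...",
    "LANGFUSE_HOST": "https://cloud.langfuse.com",  # or your self-hosted URL
}


def configure_langfuse_env(values=LANGFUSE_ENV):
    """Export Langfuse settings, keeping any values already set in the shell."""
    for name, value in values.items():
        os.environ.setdefault(name, value)
    # setdefault does not replace an existing empty string, so check explicitly.
    missing = [name for name in values if not os.environ.get(name)]
    if missing:
        raise RuntimeError(f"Empty Langfuse settings: {missing}")


configure_langfuse_env()

# The handler then needs no arguments (v2 SDK import path, an assumption):
#   from langfuse.callback import CallbackHandler
#   langfuse_handler = CallbackHandler()
```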

Tracing LangGraph

This part demonstrates how Langfuse helps you debug, analyze, and iterate on your LangGraph application using the LangChain integration.

Initialize Langfuse

Note: You need to run at least Python 3.11 (GitHub Issue). Copy the API keys from your project settings in the Langfuse UI, add them to your environment, and initialize the Langfuse client.

Simple chat app with LangGraph
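Before building the chatbot, the initialization described in the note above can be sketched as follows. This assumes the v2 Python SDK (handler in `langfuse.callback`, reading `LANGFUSE_PUBLIC_KEY`, `LANGFUSE_SECRET_KEY` and `LANGFUSE_HOST` from the environment) and its `auth_check()` method; `MIN_PYTHON` and `init_langfuse_handler` are hypothetical names.

```python
import sys

MIN_PYTHON = (3, 11)  # minimum version stated in the note


def init_langfuse_handler():
    """Create a Langfuse callback handler from LANGFUSE_* environment variables."""
    if sys.version_info < MIN_PYTHON:
        raise RuntimeError(
            f"Python {MIN_PYTHON[0]}.{MIN_PYTHON[1]}+ is required, "
            f"found {sys.version_info.major}.{sys.version_info.minor}"
        )
    # v2 SDK import path (assumption); credentials come from the environment.
    from langfuse.callback import CallbackHandler

    handler = CallbackHandler()
    # Optional: contacts the Langfuse API to validate the keys (may also raise).
    if not handler.auth_check():
        raise RuntimeError("Langfuse credentials could not be verified")
    return handler
```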

What we will do in this section:

- Build a support chatbot in LangGraph that can answer common questions

- Trace the chatbot’s input and output using Langfuse

Create Agent

Start by creating a StateGraph. A StateGraph object defines our chatbot’s structure as a state machine. We add nodes to represent the LLM and the functions the chatbot can call, and edges to specify how the bot transitions between these functions.
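A minimal sketch of such a graph, assuming a recent `langgraph` release that exports `MessagesState`, `START` and `END` from `langgraph.graph`. To stay short it has a single node wrapping the LLM and no tool nodes; `make_chatbot_node` and `build_support_graph` are hypothetical names, and `llm` can be any LangChain chat model (the `ChatOpenAI` model in the usage comment is an assumption).

```python
def make_chatbot_node(llm):
    """Return a graph node that sends the conversation so far to `llm`."""
    def chatbot(state):
        # Return only the new reply; MessagesState's add_messages reducer
        # appends it to the existing message list.
        return {"messages": [llm.invoke(state["messages"])]}
    return chatbot


def build_support_graph(llm):
    """Compile a minimal one-node support chatbot as a LangGraph StateGraph."""
    from langgraph.graph import END, START, MessagesState, StateGraph

    builder = StateGraph(MessagesState)  # the chatbot's state machine
    builder.add_node("chatbot", make_chatbot_node(llm))
    builder.add_edge(START, "chatbot")   # entry point
    builder.add_edge("chatbot", END)     # finish after one model call
    return builder.compile()


# Usage (assumed model):
#   from langchain_openai import ChatOpenAI
#   graph = build_support_graph(ChatOpenAI(model="gpt-4o", temperature=0.2))
```

Injecting the model into the node factory keeps the node logic independent of any particular provider, so it can be exercised with a stub object that only has an `invoke` method.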

Add Langfuse as a callback to the invocation

Now, we add the Langfuse callback handler for LangChain to trace the steps of our application by passing it in the invocation config: config={"callbacks": [langfuse_handler]}
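A minimal sketch of the invocation, assuming `graph` is a compiled LangGraph graph whose state holds a `messages` list with the `add_messages` reducer (as with `MessagesState`) and `langfuse_handler` is a Langfuse `CallbackHandler`; `ask_support_bot` is a hypothetical helper name.

```python
def ask_support_bot(graph, langfuse_handler, question: str) -> str:
    """Run one chatbot turn with Langfuse tracing and return the reply text."""
    result = graph.invoke(
        # (role, content) tuple; the add_messages reducer converts it to a message
        {"messages": [("user", question)]},
        config={"callbacks": [langfuse_handler]},  # trace this run in Langfuse
    )
    # The final state's last message is the model's answer.
    return result["messages"][-1].content


# Usage (placeholder objects from the earlier steps):
#   print(ask_support_bot(graph, langfuse_handler, "What is Langfuse?"))
```

Because the handler is passed through `config`, it applies to this run only; other invocations are traced only if they pass it as well. The same `config` argument is accepted by `graph.stream(...)`.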

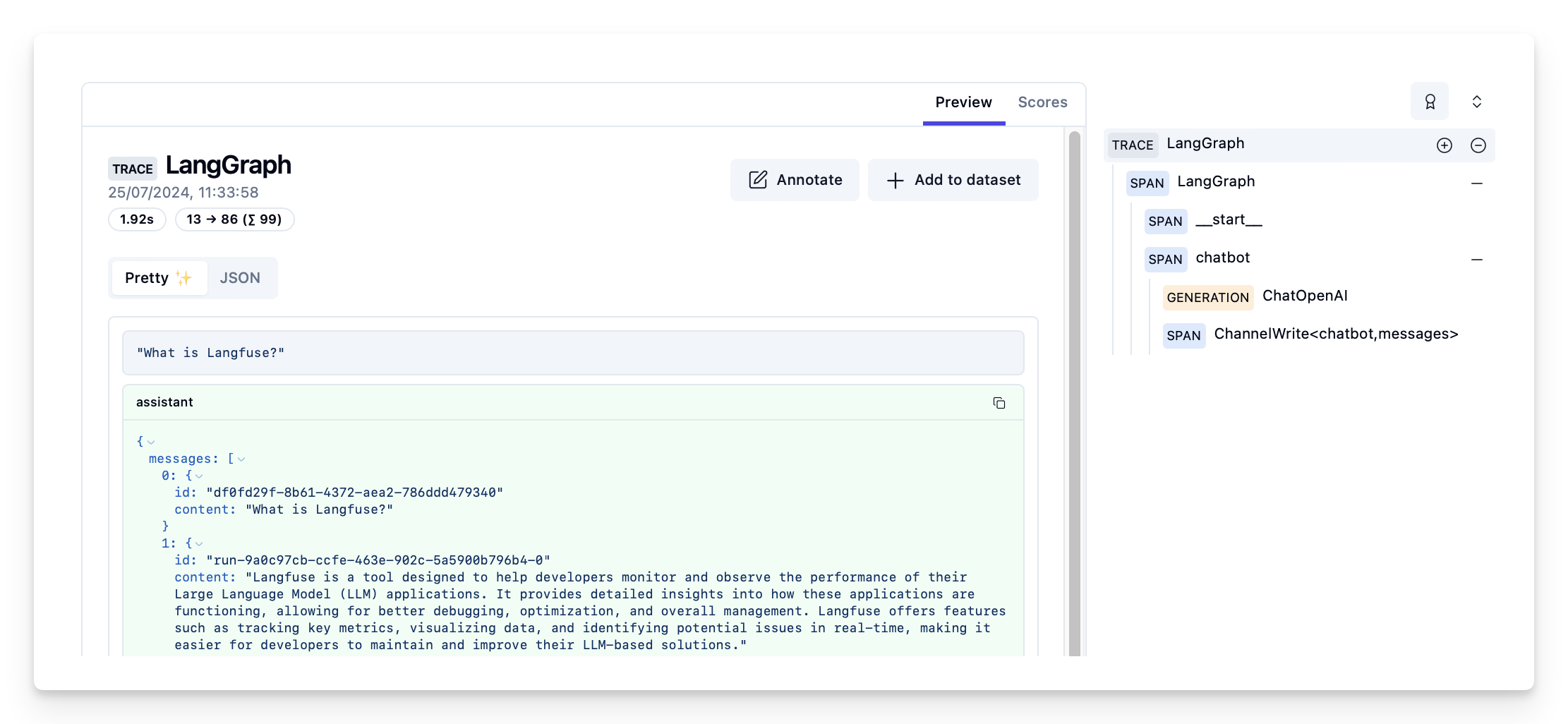

View traces in Langfuse

Example trace in Langfuse: https://cloud.langfuse.com/project/cloramnkj0002jz088vzn1ja4/traces/d109e148-d188-4d6e-823f-aac0864afbab

- Check out the full notebook to see more examples.

- To learn how to evaluate the performance of your LangGraph application, check out the LangGraph evaluation guide.